Improving Family Medicine Residency Evaluation by Revamping the Clinical Competency Committee Process

by Danielle L. Terry, PhD, ABPP, Guthrie Family Medicine Residency Program

Background

The clinical competency committee (CCC) is integral to resident evaluation and graduate medical education. The CCC is a required component of the Accreditation Council for Graduate Medical Education (ACGME) Common Program Requirements.1 Educators regularly cite difficulties with efficiency, interrater reliability, lack of transparency, limited evaluation data, and evaluation bias as threats to the integrity of the learning environment.2 The Guthrie Family Medicine Residency Program consistently received negative survey feedback regarding transparency and the quality of feedback given to residents. Faculty voiced discontent with process efficiency and faculty bias, and meetings had no identified structure. This programmatic overhaul aimed to improve efficiency, standardize the CCC evaluation process by establishing evaluation markers, and improve the transparency and delivery of resident performance feedback.

Intervention

Participants included 16 medical residents and 8 family medicine faculty members from the Guthrie Family Medicine Residency Program, a rural Pennsylvania residency program. Process changes included development and use of an electronic evaluation form that contained eight assessment domains collaboratively identified by core faculty, a standardized meeting protocol, and scheduled feedback meetings with the CCC chair following evaluation. Processes adhered to guidelines suggested by the ACGME Guidebook for Programs.

Assessment domains. Assessment domains were established during two collaborative meetings in which the CCC chair proposed domains based on available evaluation data and graduation requirements. Domains were modified as needed after discussion. The eight assessment domains were encounter numbers, procedures completed, learning modules completed, scholarly activity, milestones (examined two times per year), patient feedback, written evaluations by supervisors, and completion of the Step 3 exam. Dichotomous questions (yes/no, with an NA option) were included to determine whether a resident was at the expected performance level based on year in program. Open-ended boxes allowed an advisor to elaborate on domains that needed additional explanation, and multiple-selection checklists were used to aid advisors in interpreting narrative evaluation data.
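For programs building a similar form, the structure described above can be sketched as a small data model. This is a minimal illustration only, not the program's actual electronic form: the class names, field names, and domain identifiers are hypothetical, and the multiple-selection checklists are omitted for brevity.

```python
from dataclasses import dataclass, field
from enum import Enum


class OnTrack(Enum):
    """Answer to 'Is the resident at the expected level for their year?'"""
    YES = "yes"
    NO = "no"
    NA = "NA"


# The eight domains named in the text; these identifiers are illustrative.
DOMAINS = (
    "encounter_numbers",
    "procedures_completed",
    "learning_modules_completed",
    "scholarly_activity",
    "milestones",
    "patient_feedback",
    "supervisor_written_evaluations",
    "step_3_exam",
)


@dataclass
class DomainRating:
    on_track: OnTrack
    comment: str = ""  # open-ended box for advisor elaboration


@dataclass
class EvaluationForm:
    resident_id: str  # hypothetical identifier
    pgy_year: int     # year in program
    ratings: dict = field(default_factory=dict)

    def rate(self, domain: str, on_track: OnTrack, comment: str = "") -> None:
        """Record the advisor's answer for one domain."""
        if domain not in DOMAINS:
            raise ValueError(f"Unknown domain: {domain}")
        self.ratings[domain] = DomainRating(on_track, comment)

    def missing_domains(self) -> list:
        """Domains the advisor has not yet rated."""
        return [d for d in DOMAINS if d not in self.ratings]

    def domains_needing_discussion(self) -> list:
        """Domains rated 'no', which the group meeting might prioritize."""
        return [
            d for d in DOMAINS
            if d in self.ratings and self.ratings[d].on_track is OnTrack.NO
        ]


form = EvaluationForm("resident-01", pgy_year=2)
form.rate("encounter_numbers", OnTrack.YES)
form.rate("step_3_exam", OnTrack.NO, "Exam not yet scheduled")
```

A structure like this lets an advisor see at a glance which domains remain unrated before the premeeting and which ones warrant discussion at the group CCC meeting.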

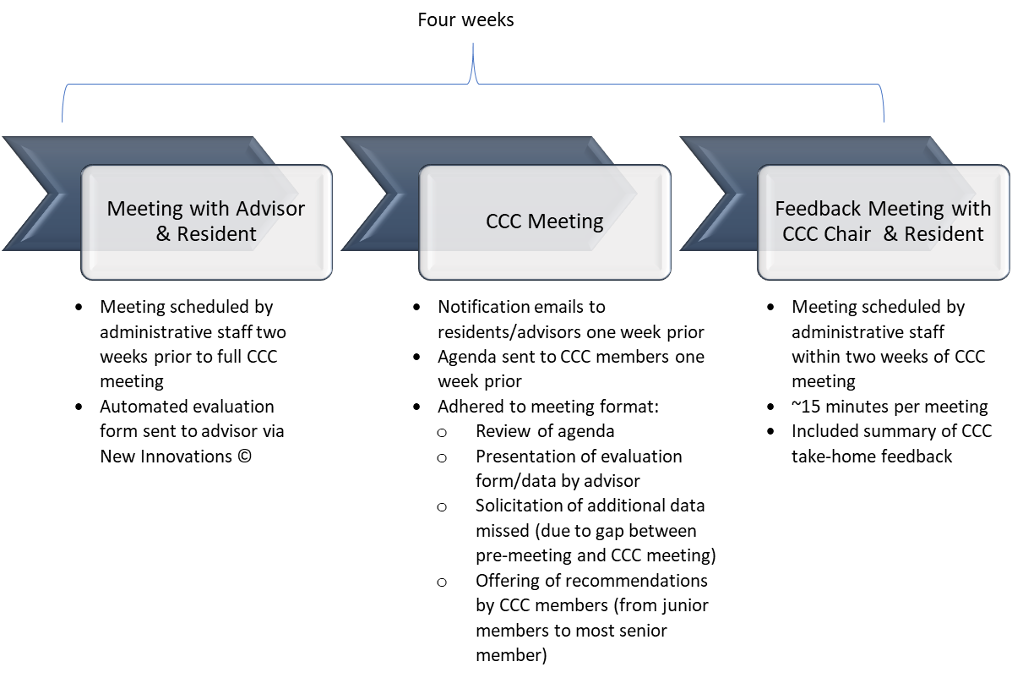

Evaluation process. The revised evaluation process had three parts: a premeeting (between advisor and resident advisee), a group CCC meeting (including only CCC members and the advisor), and a post-evaluation feedback meeting (between CCC chair and advisee). All core faculty and CCC members reviewed the new process, and the CCC chair solicited their concerns and suggestions. Faculty members who were not at the group meeting were approached individually to reduce miscommunication and aid implementation of the changes. See Figure 1 for process components.

Figure 1

Six medical residents and seven faculty members had experience with the process changes; these individuals completed open-ended surveys assessing their impressions of the changes. Hours spent in CCC meetings were derived from scheduling data for the year before and the year after the process changes to allow for comparison. Feedback meetings were scheduled, and whether each was completed within 2 weeks of evaluation was recorded to determine whether residents received timely feedback.

Results

Qualitative feedback from administrative staff, faculty, and residents who were involved both before and after the intervention was positive; respondents cited improved transparency and efficiency as the greatest benefits. Time efficiency analysis showed that core faculty spent 46 fewer hours per year in meetings (a 72% reduction). All post-evaluation meetings and 95% of pre-evaluation meetings were completed by advisors with residents.
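The reported time savings can be put in context with a short back-calculation. The sketch below assumes the 72% figure is expressed relative to the prior year's total meeting hours and that both reported numbers are rounded; the resulting totals of roughly 64 hours before and 18 hours after are inferred here, not reported by the program.

```python
# Reported values from the time efficiency analysis.
hours_saved = 46        # reduction in meeting hours per year
fraction_saved = 0.72   # reported proportional reduction

# Inferred values (assumption: 72% is relative to the prior-year total).
baseline_hours = hours_saved / fraction_saved
remaining_hours = baseline_hours - hours_saved

print(f"Estimated hours before changes: {baseline_hours:.0f}")
print(f"Estimated hours after changes: {remaining_hours:.0f}")
```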

Conclusions

Findings suggested that changes to the process were beneficial and well received by faculty, residents, and administrative staff. Despite significant reductions in time spent discussing resident progress, both faculty and residents found the changes to be a productive improvement for the program. Future process changes may include actively integrating residents into their own evaluation process to further increase transparency and educational involvement. Other programs might consider collaboratively identifying program-relevant assessment domains and adopting standardized processes for assessment and feedback.

References

- Andolsek K, Padmore J, Hauer K, Edgar L, Holmboe E. Clinical Competency Committees: A Guidebook for Programs, 3rd Edition. Chicago: Accreditation Council for Graduate Medical Education; 2020. https://www.acgme.org/Portals/0/ACGMEClinicalCompetencyCommitteeGuidebook.pdf. Accessed March 24, 2021.

- Klein R, Julian KA, Snyder ED, et al; From the Gender Equity in Medicine (GEM) workgroup. Gender bias in resident assessment in graduate medical education: review of the literature. J Gen Intern Med. 2019;34(5):712-719. doi:10.1007/s11606-019-04884-0